Generative

-

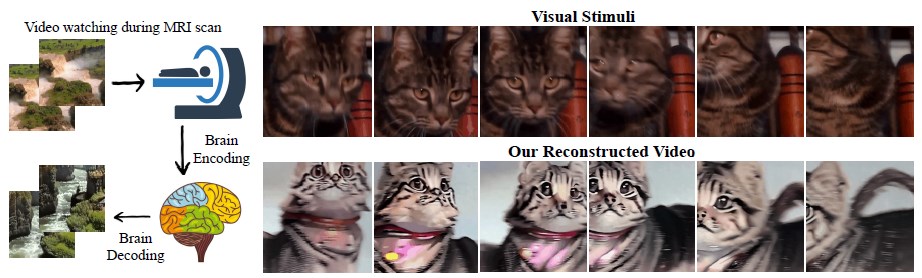

MinD-Video model creates high-quality videos from your brain activity

MinD-Video is a new technology that can generate high-quality videos from brain signals.…

-

Make-An-Animation: a U-Net based diffusion model for 3D human motion generation

Make-An-Animation is a new text-to-motion generation model that creates realistic and diverse 3D…

-

DragGAN: edit images by simply dragging some points on them

DragGAN (Drag Your GAN) is an interactive method for editing GAN-generated images by simply dragging some…

-

How Stability AI is advancing open-source AI with StableStudio

Stability AI, a leading company in the field of generative AI, has announced…

-

Nvidia’s new high resolution text-to-video synthesis with Latent Diffusion Models

The AI Lab of Nvidia in Toronto launched a text-to-video generation model that uses…

-

DreamPose: fashion image-to-video generation via Stable Diffusion

DreamPose is a new method that generates fashion videos from still images, through…

-

NeRF-Supervised, a novel technique for training stereo models

Google introduced NeRF-Supervised, a new framework for training deep stereo networks without requiring any…

-

Painting with sound and emotion: the Frida synesthesia

A new model that uses sound and speech to guide the robotic painting…

-

Text2Video-Zero: text-to-video diffusion models without any training or optimization

Text2Video-Zero is a new low-cost approach to produce videos from text prompts without the…

-

Ultra Fast ControlNet with Hugging Face Diffusers

Ultra Fast ControlNet with Hugging Face Diffusers is a new technology that allows…