Researchers from MIT, TUM, and Harvard University used a learned model to create better hash functions that accelerate data retrieval in large databases.

Hashing is a critical component in numerous applications, including data security, indexing databases, compressing data, and cryptography.

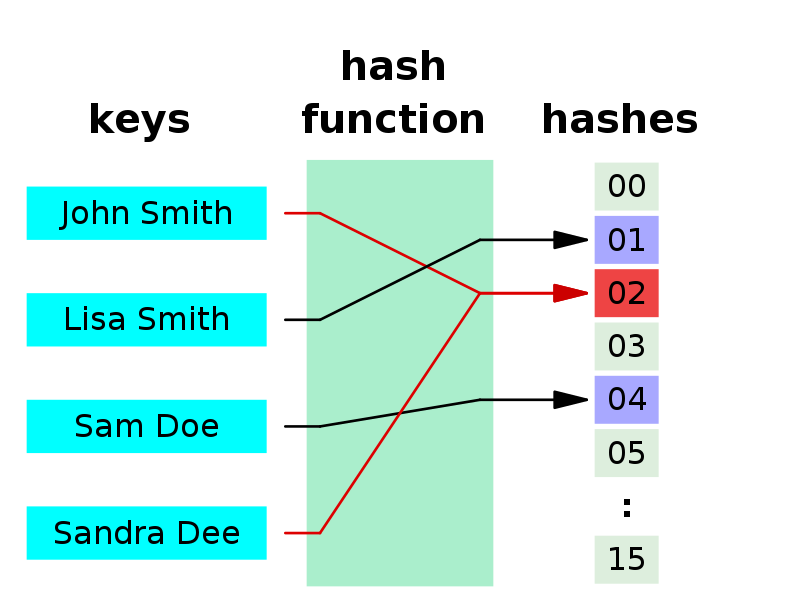

It involves taking any input data, regardless of its size, and transforming it into a fixed-size output value, known as a hash.

The problem

The main problem with hashing is the possibility of collisions. As traditional hash functions generate codes randomly, it is possible for two distinct pieces of data to produce the same hash value.

Collisions can lead to reduced performance, longer search times, and even security vulnerabilities, especially in applications where data integrity is critical.

To reduce the likelihood of collisions, various approaches are available, including chaining, probing, cuckoo hashing, or the use of perfect hash functions.

A perfect hash function generates a unique hash value for each distinct input value, without any collisions. However, they are difficult to create, especially for large input sets.

Learned models

The authors of the paper set out to explore the possibility of improving hash functions by replacing traditional hash functions with trained models.

In addition, the study aimed to investigate whether reducing the number of collisions would improve performance, particularly in indexing and joining large databases.

The learned models referred to in the study were developed by applying machine learning algorithms to identify specific features in the dataset.

Evaluation

The study employs both real and synthetic datasets consisting of 64-bit integer keys. For the real key datasets, the researchers utilized four datasets from the SOSD benchmark, including FB, wiki, osm, and book, each containing 200 million keys.

The study employs four distinct methods to generate synthetic keys: the gap_10, uniform, normal, and lognormal processes.

to the wiki, fb, osm and book datasets

- In gap_10, sequential keys are first generated at regular intervals of 10, and then 10% of the keys are randomly deleted.

- Uniform keys are generated randomly in the range [0, 250].

- Normal and lognormal processes the keys are generated from normal and lognormal distributions, respectively, and then linearly scaled to the range [0, 250].

Note: Hash functions that produce a uniform distribution of gap lengths are generally considered more effective in minimizing collisions and achieving optimal performance.

Results

The results revealed that in certain scenarios, using learned models resulted in a 1.4x reduction in probe latency. This means that the time taken to search for a key in the hash table has decreased.

Also, it achieved a 28% decrease in the runtime of non-partitioned hash joins, compared to the next best baseline, for select datasets. This suggests that the use of learned models as hash functions can reduce the time taken to execute a type of join operation between two tables in a database, where the tables are not partitioned before the join.

The team’s experiments also demonstrated that these learned models were frequently more computationally efficient than perfect hash functions.

Conclusion

The findings suggest that while learned models can successfully decrease the collisions in certain scenarios, the outcomes are dependent on the way data is distributed.

They also may be able to capture more complex relationships between data points than the simple hash functions.

Expanding on their analysis, the researchers aim to utilize learned models to design hash functions for different types of data and investigate the use of learned hashing in databases that allow for dynamic changes such as data insertion or deletion.

Whether learned models can replace hash functions entirely is subject to debate and would depend on various factors such as the specific use case, the size and type of data, and the resources available for implementation.

Learn more:

- Research paper: “Can Learned Models Replace Hash Functions?” (on ACM)

- Story source: “New method accelerates data retrieval in huge databases” (on MIT News)