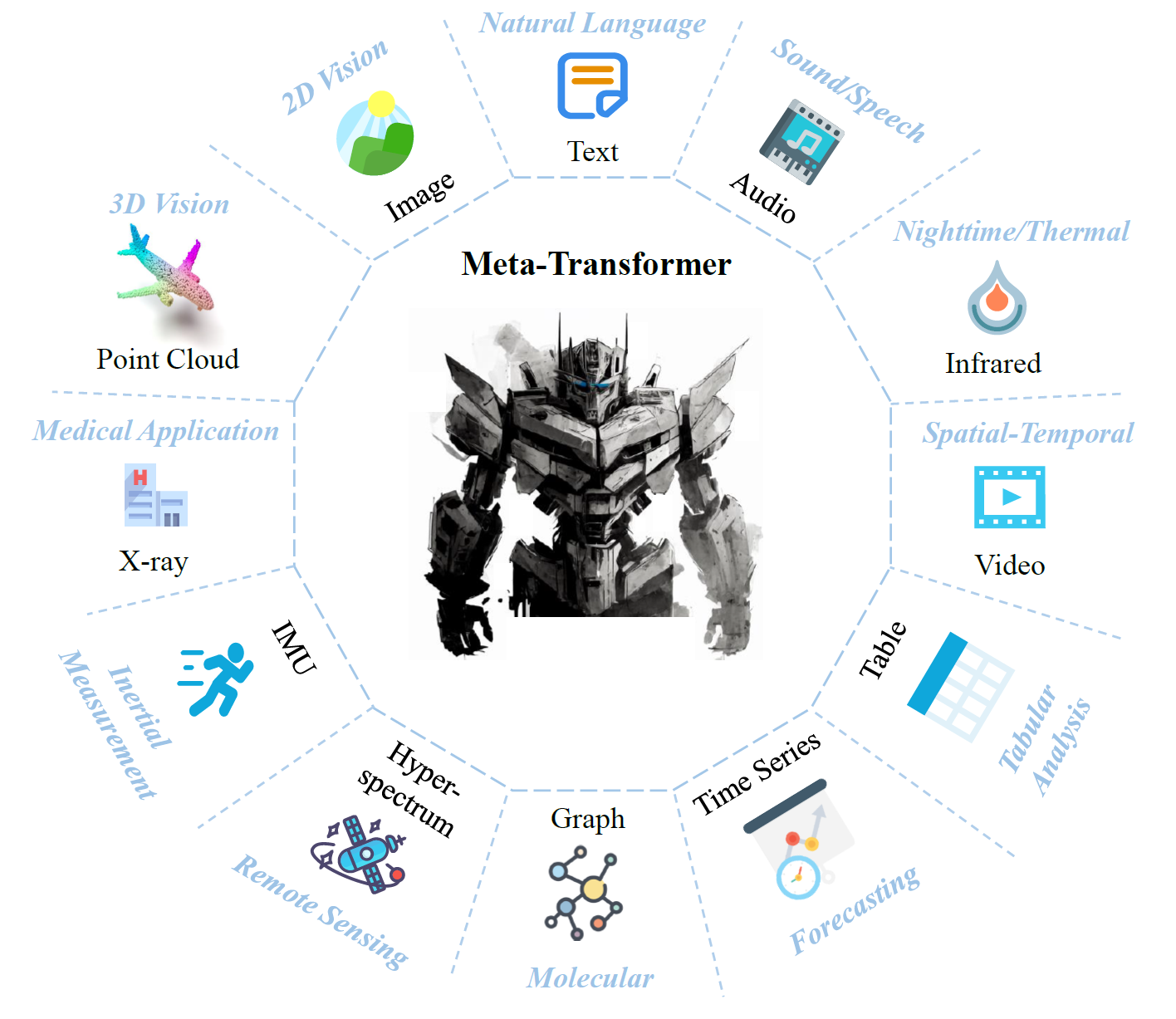

Meta-Transformer is a new framework for multimodal learning. It uses a single network to encode 12 modalities: natural language, images, point clouds, audio, video, tabular data, graphs, time series, hyperspectral images, IMU data, medical images, and infrared images. Meta-Transformer can handle a wide range of tasks across these modalities without requiring any paired multimodal training data.

Meta-Transformer works by converting any type of data into a common format called tokens (pieces of information). It then uses a single network (encoder) to process these tokens and learn how to relate them together.

It can be used for a wide range of applications such as generating captions for images, synthesizing speech from text, or answering questions from videos.

The new framework was proposed by a research team from the Chinese University of Hong Kong and Shanghai AI Lab.

The authors demonstrate the effectiveness of Meta-Transformer on various benchmarks and show that it can achieve competitive or even superior performance compared to existing methods.

The table below compares Meta-Transformer with related work on perception tasks:

| Method | Modalities | Share Parameters | Unpaired Data |
|---|---|---|---|
| Transformer | Text | ✘ | ✘ |
| ViT, Swin Transformer, MAE | Image | ✘ | ✘ |
| Point Transformer, PCT, Point ViT | Point Cloud | ✘ | ✘ |
| AST, SSAST | Audio | ✘ | ✘ |
| CLIP, Flamingo, VLMO, OFA | Text, Image | ✘ | ✘ |
| BEiT-3 | Text, Image | Serial Layers | ✘ |
| ImageBind | Text, Image, Point Cloud, Video, Infrared Image, IMU | ✘ | ✘ |
| Meta-Transformer | Text, Image, Point Cloud, Audio, Video, Tabular Data, Graph Data, Time-Series, Hyperspectral, IMU, Medical Image, Infrared Image | Whole Backbone | ✔ |

The challenge

The human brain can process and relate information from different senses, such as vision, hearing, touch, smell, and taste. In the same way, multimodal learning aims to build models that can handle data from multiple sources and modalities.

Multimodal learning is important for many applications, such as image captioning, speech recognition, video understanding, and emotion recognition. These applications require models that can understand and relate information from different modalities, such as text, images, audio, and video.

This is a challenging task, because the data sources have different formats, structures, patterns and meanings. For example, images are made of pixels arranged in a grid, while natural language is made of words arranged in a sequence.

Moreover, traditional methods for multimodal learning often require paired data or modality-specific networks, which limit their versatility and efficiency.

Meta-Transformer

Meta-Transformer overcomes these limitations by using a unified network that can process different types of data without requiring paired data. It’s like a universal translator that can convert any type of data into a common format that it can understand and process.

The diagram below shows how Meta-Transformer can handle different modalities with unpaired data using a single network.

The diagram consists of three parts: the input data, the Meta-Transformer network, and the output data.

- The input data are examples of different modalities that Meta-Transformer can handle, such as natural language, image, point cloud, audio spectrogram, etc.

- The Meta-Transformer network relates different types of data together (cross-modal relationships).

- The output data are examples of different tasks, such as classification, detection, and segmentation.

The Meta-Transformer network

The Meta-Transformer network consists of three simple components:

- A unified data-to-sequence tokenizer that converts the raw input data from various modalities into a common embedding space. For example, it can transform an image into a sequence of tokens that represent patches or regions of the image.

- A modality-shared encoder that takes these tokens as input and applies layers of attention to extract high-level semantic features of the input data. The encoder is frozen: its parameters stay fixed during downstream training, so the same encoder can be reused for different modalities and tasks without any fine-tuning.

- Task-specific heads for downstream tasks such as classification, segmentation, detection, etc. They are trained on top of the modality-shared encoder, and they are specific to the task at hand.
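To make the division of labor between the frozen encoder and the trainable heads concrete, here is a minimal sketch in plain Python. It is not the authors' implementation. `FrozenEncoder` is a hypothetical stand-in (one randomly initialized self-attention layer followed by mean pooling) for the real pretrained encoder, `LinearHead` is a hypothetical binary classifier, and the two token sequences are toy data. It only illustrates that training touches the head's parameters and leaves the encoder unchanged.

```python
import copy
import math
import random


def matvec(matrix, vector):
    return [sum(m * v for m, v in zip(row, vector)) for row in matrix]


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


class FrozenEncoder:
    """Toy stand-in for the shared encoder: one self-attention layer + mean pooling.

    Assumption: random weights replace the pretrained ones; they are never updated.
    """

    def __init__(self, dim, seed=0):
        rng = random.Random(seed)

        def init():
            return [[rng.gauss(0.0, 0.5) for _ in range(dim)] for _ in range(dim)]

        self.wq, self.wk, self.wv = init(), init(), init()
        self.scale = 1.0 / math.sqrt(dim)

    def __call__(self, tokens):
        queries = [matvec(self.wq, t) for t in tokens]
        keys = [matvec(self.wk, t) for t in tokens]
        values = [matvec(self.wv, t) for t in tokens]
        outputs = []
        for q in queries:
            scores = [self.scale * sum(a * b for a, b in zip(q, k)) for k in keys]
            attn = softmax(scores)
            # Attention output: weighted sum of the value vectors, per dimension.
            outputs.append([sum(w * x for w, x in zip(attn, col)) for col in zip(*values)])
        # Mean-pool over the sequence to get one feature vector per input.
        return [sum(col) / len(outputs) for col in zip(*outputs)]


class LinearHead:
    """Binary classification head: the only trainable part in this sketch."""

    def __init__(self, dim):
        self.weights = [0.0] * dim
        self.bias = 0.0

    def predict(self, features):
        logit = sum(w * f for w, f in zip(self.weights, features)) + self.bias
        return 1.0 / (1.0 + math.exp(-logit))

    def sgd_step(self, features, label, lr=0.5):
        error = self.predict(features) - label  # d(log loss) / d(logit)
        self.weights = [w - lr * error * f for w, f in zip(self.weights, features)]
        self.bias -= lr * error


def log_loss(head, dataset):
    total = 0.0
    for features, label in dataset:
        p = head.predict(features)
        total -= math.log(p) if label == 1 else math.log(1.0 - p)
    return total / len(dataset)


encoder = FrozenEncoder(dim=4)
head = LinearHead(dim=4)
frozen_snapshot = copy.deepcopy((encoder.wq, encoder.wk, encoder.wv))

# Two already-tokenized inputs of different lengths (hypothetical toy data).
sequence_a = [[1.0, 0.0, 0.0, 0.0], [1.0, 1.0, 0.0, 0.0]]
sequence_b = [[0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 1.0, 1.0], [0.0, 0.0, 0.0, 1.0]]

# Because the encoder never changes, its features can be computed once and cached.
dataset = [(encoder(sequence_a), 0), (encoder(sequence_b), 1)]

loss_before = log_loss(head, dataset)
for _ in range(200):
    for features, label in dataset:
        head.sgd_step(features, label)
loss_after = log_loss(head, dataset)

print(f"loss before: {loss_before:.3f}, after: {loss_after:.3f}")
assert (encoder.wq, encoder.wk, encoder.wv) == frozen_snapshot  # encoder untouched
```

One practical consequence of freezing, visible in the sketch, is that encoder features can be computed once per input and cached, so training a new head is cheap compared with fine-tuning the whole network.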

The next diagram shows the tokenizer (the first component):

The raw input data from various modalities are converted into tokens that are compatible with the modality-shared encoder.

- For text, the paper describes a WordPiece tokenizer with a 30,000-token vocabulary that splits words into subwords and maps each subword to an embedding.

- For images, it uses a patch-level tokenizer that divides the image into patches and encodes each flattened patch with a linear projection.

- For point clouds, the paper describes sampling representative points with farthest point sampling (FPS), grouping their neighbors with k-nearest neighbors (KNN), and embedding each group.

- For audio, the paper describes converting the waveform into a log Mel filterbank spectrogram and splitting the spectrogram into patches, much like an image.
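The image case above can be sketched in a few lines of plain Python. This is an illustration under stated assumptions, not the project's code: `patchify`, `make_projection`, and `linear_projection` are hypothetical helper names, the projection weights are random rather than learned, and the 8 x 8 image, patch size 4, and embedding width 16 are arbitrary toy values.

```python
import random


def patchify(image, patch):
    """Split an H x W x C image (nested lists) into flattened, non-overlapping patches."""
    height, width = len(image), len(image[0])
    if height % patch or width % patch:
        raise ValueError("image sides must be divisible by the patch size")
    patches = []
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            flat = []
            for row in image[top:top + patch]:
                for pixel in row[left:left + patch]:
                    flat.extend(pixel)  # append every channel value of this pixel
            patches.append(flat)
    return patches


def make_projection(in_dim, out_dim, seed=0):
    """Random weights as a placeholder for a learned projection (assumption)."""
    rng = random.Random(seed)
    weight = [[rng.gauss(0.0, 0.02) for _ in range(in_dim)] for _ in range(out_dim)]
    bias = [0.0] * out_dim
    return weight, bias


def linear_projection(vectors, weight, bias):
    """Map each flattened patch to a token embedding: token = W @ patch + b."""
    return [
        [sum(w * x for w, x in zip(w_row, vec)) + b for w_row, b in zip(weight, bias)]
        for vec in vectors
    ]


# Toy 8 x 8 RGB image with 4 x 4 patches -> 4 tokens of width EMBED_DIM.
PATCH, EMBED_DIM = 4, 16
image = [[[(r + c) / 14.0, r / 7.0, c / 7.0] for c in range(8)] for r in range(8)]

patches = patchify(image, PATCH)
weight, bias = make_projection(PATCH * PATCH * 3, EMBED_DIM)
tokens = linear_projection(patches, weight, bias)

print(len(tokens), len(tokens[0]))  # 4 16
```

Whatever the modality, the tokenizer's job is the same: produce a sequence of vectors that all share one width, so the shared encoder can consume them without knowing where they came from.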

Experiments

Meta-Transformer was evaluated on a wide range of modalities and compared with modality-specific state-of-the-art (SOTA) methods, that is, the best-performing models designed for a single type of data such as text, images, audio, or video.

Below we can see some results. You can find more on the project page and paper.

The researchers showed that Meta-Transformer can achieve competitive or even superior results compared to existing methods on tasks such as text understanding, image understanding, and infrared and hyperspectral data understanding.

Conclusion

Meta-Transformer is a flexible framework that can perform unified learning across 12 modalities with unpaired data. It is able to learn how to relate different types of data together.

It has been shown to be effective on a variety of multimodal learning tasks.

Learn more:

- Research paper: “Meta-Transformer: A Unified Framework for Multimodal Learning” (on arXiv)

- Project website

- GitHub repository