MinD-Video is a new technology that can generate high-quality videos from brain signals. The model could help us better understand the brain function and answer unsolved problems in cognitive neuroscience.

The model was proposed by a research team from the the National University of Singapore and the Chinese University of Hong Kong.

The model creates videos with high accuracy, setting a new standard in this area. It learns how to map the spatiotemporal patterns of the brain by using the continuous functional magnetic resonance imaging (fMRI) data of the brain cortex.

According to the paper, Mind-Video achieves an average accuracy of 85% in semantic classification tasks and 0.19 in structural similarity index (SSIM), outperforming the previous state-of-the-art by 45%.

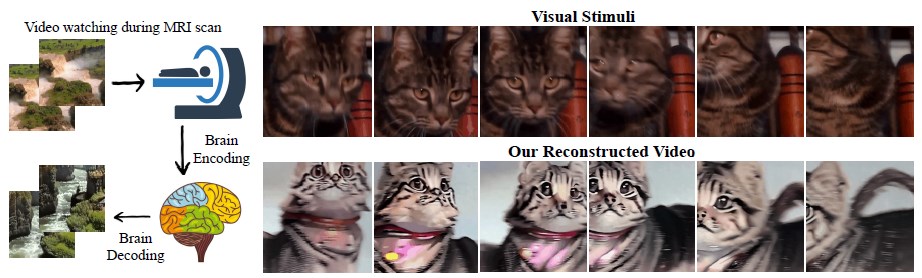

In the image below we can see how MinD-Video recreates a video with a cat from the brain that is stimulated by the visual input.

Our life experience is like a movie that plays in our mind, with each moment smoothly merging into the next. But how does our brain create this movie? How does it encode and decode the visual information that we see? This is a question that cognitive neuroscientists try to answer.

One way to do this is to recreate human vision from brain waves using fMRI. This is a type of brain scan that measures the blood flow in the brain, which reflects the neural activity.

However, it is a difficult task, because fMRI is much slower than video. A typical video has 30 frames per second (FPS), which means it changes 30 times per second. But fMRI takes 2 seconds to capture one frame of brain activity.

So, one fMRI frame corresponds to 60 video frames. How can we match these different speeds and recreate human vision from brain waves?

This is the challenge that the researchers faced in this study.

Methodology

The team proposed a model made of two parts that can work together or separately (see picture below):

- The first part is the fMRI encoder, which converts the brain data into a 2D image representation that contains information about what the participants saw.

- The second part is the video generative model, which creates realistic and smooth videos from the 2D image representation.

They trained the two parts separately and then fine-tuned them together, which makes the model flexible and adaptable.

Main steps

I. The fMRI pre-processing and region selection: First, the fMRI data captures brain activity using blood-oxygen-level-dependent (BOLD) signals. The blood flow shows which parts of the brain becomes active when someone sees something. For further analysis, they select the regions of interest (ROIs), focusing on the regions of the brain that processes visual information.

II. Pre-training and Training of the fMRI encoder: The fMRI encoder followed a progressive learning process starting from general features to specific features of the visual cortex.

- The fMRI encoder learns the general features of the visual cortex through a process of large-scale pre-training with masked brain modeling (MBM). The fMRI data is rearranged and transformed into tokens.

- A spatiotemporal attention layer is used to handle the time delay between the brain signals and the visual stimuli. This layer processes multiple fMRI frames in a sliding window, considering both spatial and temporal correlations.

- The fMRI encoder learns the specific features of the visual cortex. It is trained with {fMRI, video content, corresponding textual captions} triplets. The purpose of using these triplets is to align the fMRI embeddings with a shared CLIP space.

III. Video generation using an augmented Stable Diffusion model to generate videos from the fMRI embeddings. A Scene-Dynamic Sparse Causal (SC) attention mechanism is used for video smoothness.

IV. Fine-tuning the fMRI encoder and the video generative model together using labeled data. The whole self-attention, cross-attention, and temporal-attention heads are tuned during this phase.

Overall, this method involves preprocessing the brain data, training an fMRI encoder, generating videos using a specialized model, and fine-tuning the models based on labeled data.

The datasets

The researchers used two types of data: one for pre-training the fMRI encoder and one for fine-tuning and evaluating the video generative model.

- Pre-training dataset Human Connectome Project, which is a large collection of brain data from different people and tasks. The researchers used 600,000 fMRI segments from a big amount of fMRI scan data.

- Paired fMRI-Video dataset that has fMRI data and video clips from three people who watched different things such as animals, humans, and natural scenery. The dataset has two parts: training data with 18 video segments and 4,320 fMRI-video pairs, and test data with 5 video segments and 1,200 fMRI-video pairs.

Evaluation

They evaluated the Mind-Video framework with various semantic and pixel-level metrics, such as structural similarity index (SSIM), semantic classification accuracy, and image captioning.

They also compare their framework with three other methods of video reconstruction from brain activity and show that their framework outperforms the previous state-of-the-art by 45%.

Conclusion, future research

MinD-Video is a method that can create high-quality videos using data from fMRI brain scan and it is able to reconstruct videos that semantically matched the original videos.

The proposed model can help us to better understand the brain activity and to develop brain-computer interfaces using brain signals.

As this approach focuses on the brain parts that handle the visual information, future research might explore the whole brain activity.

There are also ethical considerations regarding privacy and responsible use of this technology.

Learn more:

- Research paper: “Cinematic Mindscapes: High-quality Video Reconstruction from Brain Activity” (on arXiv)

- GitHub repository

- Project page