Large language models are rapidly entering the healthcare space. But how do we know if they’re truly effective at answering medical questions? To address this, OpenAi launched HealthBench (paper, code, blog), an open-source benchmark designed to evaluate the LLMs used in healthcare applications.

Existing benchmarks such as MMLU and MedQA rely on structured test formats and often miss the real-world nature of clinical conversations and may also lack direct validation by medical experts. HealthBench addresses these gaps by using physician-developed criteria that more accurately reflect the complexity and context of real clinical interactions.

The new benchmark is available at the simple-evals GitHub repository, providing datasets, evaluation scripts, and documentation to facilitate benchmarking and analysis of LLMs in realistic medical scenarios.

Contents

- HealthBench structure

- How HealthBench works

- OpenAI models on HealthBench

- Performance-cost frontier

- Performance by themes and axes

- Overall results: who’s winning?

- How to use

- Conclusion

- References

- Recommended books

HealthBench structure

- Dataset composition: HealthBench consists of 5K simulated health-related conversations between LLMs and healthcare professionals, covering 26 medical specialties. These conversations are designed to reflect real-world scenarios.

- Rubric evaluation mechanism: AI responses are graded using physician-crafted rubrics (scoring frameworks), encompassing over 48K unique criteria. They were developed by a global group of 262 physicians and cover multiple dimensions of model behavior. The rubrics are organized into five core behavioral axes: accuracy, completeness, communication quality, context awareness, instruction following.

- Multilingual and diverse case coverage: The benchmark includes conversations in 49 languages and covers a wide range of medical scenarios, from routine check-ups to complex emergencies.

- Automated evaluation engine: The system employs a model-based grader powered by GPT-4.1 to evaluate AI responses. It applies predefined rubrics to generate individual scores and compute an aggregate rating.

- Additionally, HealthBench includes two specialized evaluation subsets:

- HealthBench Hard, which consists of particularly challenging cases where current models perform poorly (the top-performing model currently scores only 32%).

- HealthBench Consensus, which focuses on 34 critical dimensions of model behavior validated by physician consensus.

How HealthBench works

- Model responses are evaluated by GPT-4.1 (used as a grading assistant)

- Each criterion is scored from -10 (harmful) to +10 (optimal)

- Scores are weighted by clinical importance

- You get an overall performance percentage

The image below shows how HealthBench evaluates an LLM’s health-related advice using a realistic scenario: a parent expresses concern about their baby’s decreased energy and movement. The AI assistant responds by asking follow-up questions before providing a final recommendation.

A model-based grader (GPT-4.1) evaluates the AI’s response based on each rubric criterion. Every criterion has a weighted point value that reflects its clinical significance, and GPT-4.1 assigns a score between -10 (indicating potential harm) and +10 (indicating an ideal response) for each one. The overall score is determined by summing the points awarded across all criteria.

The model scored 11 out of a possible 22 points, or 50%. It received a negative score for placing the urgent medical advice too late in the response, which could impact patient comprehension and timely action. Additionally, it received zero points for failing to explain potential causes of the symptoms.

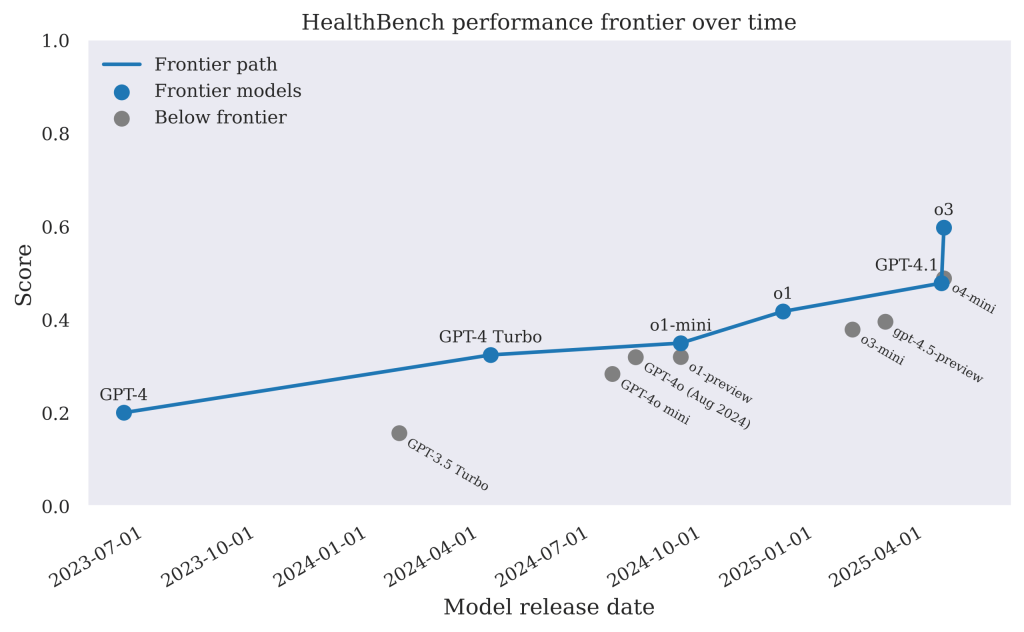

OpenAI models on HealthBench

To understand how AI capabilities in healthcare are evolving, it’s helpful to examine how OpenAI’s models have performed over time on HealthBench. The results show that newer models are not only more capable but also better aligned with medical safety standards, as evaluated by HealthBench. This reflects meaningful progress in deploying LLMs for sensitive, complex applications like healthcare.

Performance-cost frontier

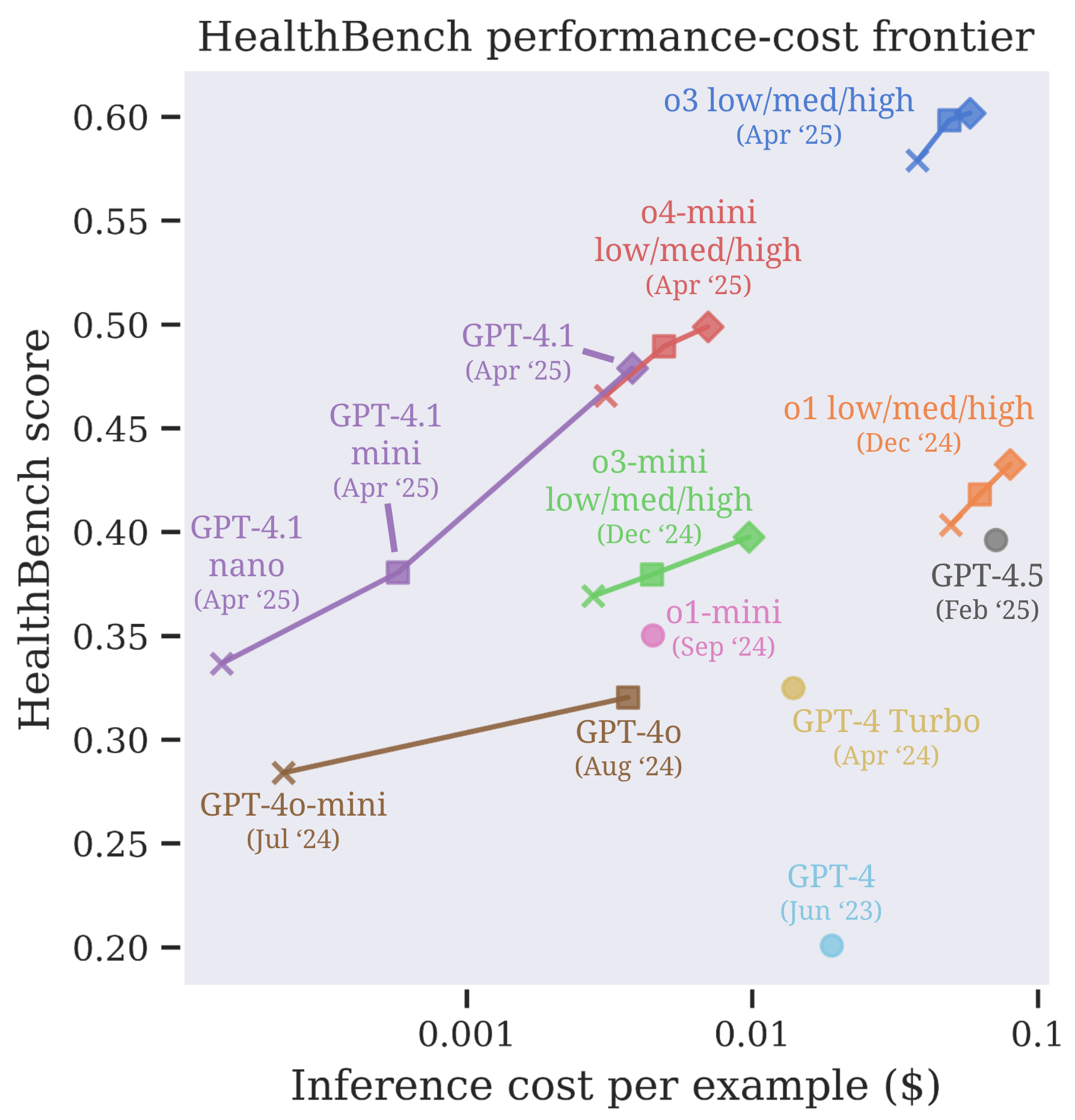

To evaluate both the effectiveness and efficiency of AI models in healthcare, it’s important to consider not just their performance, but also the cost required to achieve it.

The picture below shows how well different AI models perform on health tasks — balanced against how expensive they are to run. The performance-cost frontier is made of OpenAI models from April 2025 (o3, o4-mini, GPT-4.1), which are the most efficient.

The o3 low/med/high models (blue) in the upper right perform best overall, with scores around 0.60 — the top of the chart. Models like GPT-4.1 mini and GPT-4.1 (purple) offer a great performance-to-cost ratio. Despite not scoring the highest, they are very efficient for their inference cost. GPT-4.1 nano and GPT-4o-mini are very cheap (far left), but their performance is lower — around 0.3–0.4.

High cost doesn’t always mean better, as we can see the case of GPT-4 (Jun ’23) which is expensive and underperforms newer, but cheaper models like GPT-4.1 mini and GPT-4.1.

Performance by themes and axes

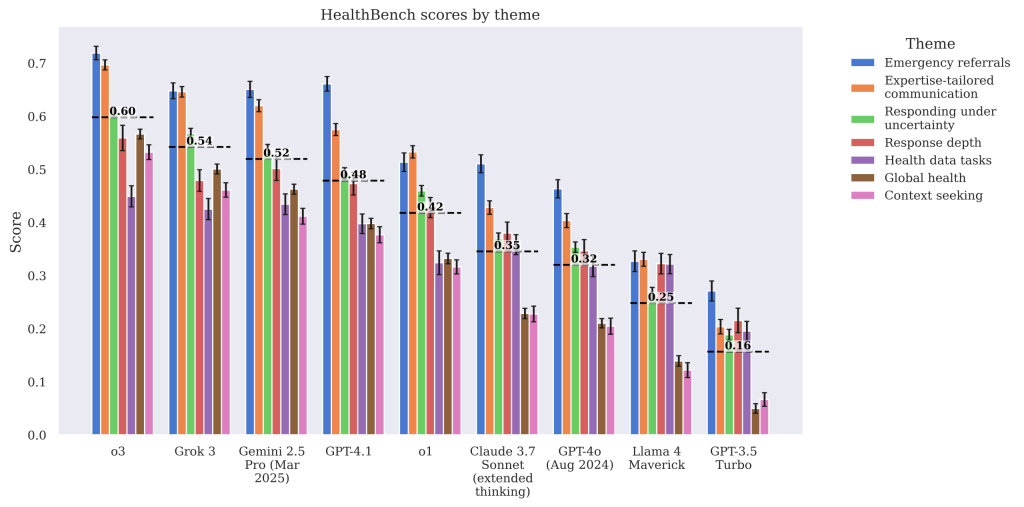

HealthBench evaluates LLMs across a range of clinically meaningful scenarios, organized into 7 themes that reflect real-world challenges in clinical decision-making. Each theme includes specific rubric criteria used to assess model responses. Each rubric criterion corresponds to an axis that specifies the aspect of model behavior being graded, including accuracy, communication quality, and context seeking.

The figure below illustrates how different LLMs perform across several key medical reasoning themes evaluated by HealthBench. Each group of bars represents a different model, and each colored bar within a group corresponds to performance on a specific theme.

o3 (the far-left model) performs best overall, achieving the highest average score (≈0.60), with strong results particularly in emergency referrals and communication. Grok 3 and Gemini 2.5 Pro (Mar 2025) follow closely, scoring around 0.54–0.52, indicating competitive performance across most themes, while GPT-3.5 Turbo scores the lowest (~0.16), revealing limitations in handling complex or safety-critical health tasks.

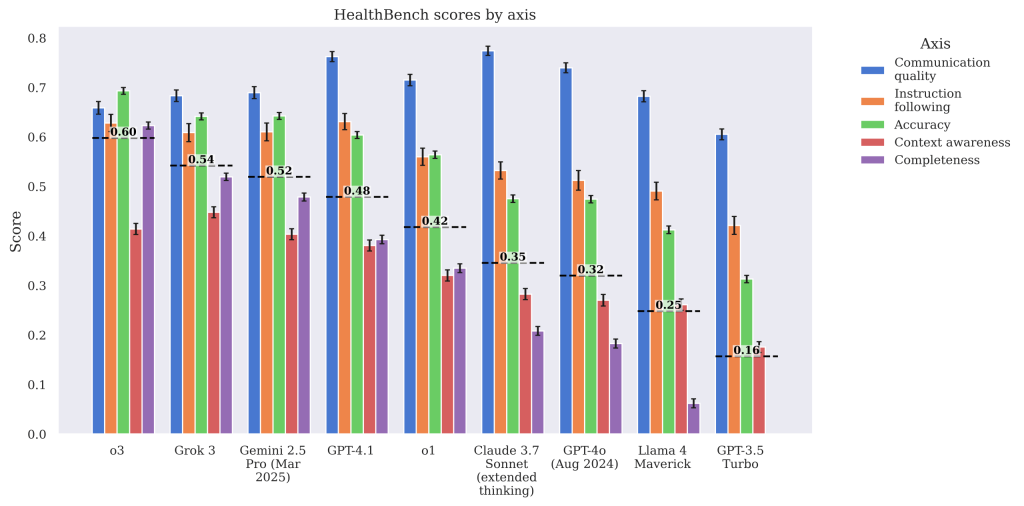

The next bar chart shows how various LLMs perform across five core evaluation axes critical to safe and effective medical reasoning.

o3 ranks the highest overall, with a mean score of 0.60, and leads or ties for the top score on nearly every axis, particularly performing well in communication, accuracy, and instruction following. Grok 3 and Gemini 2.5 Pro (Mar 2025) follow closely, while Claude 3.7 Sonnet, GPT-4o, and Llama 4 have important drops in performance across accuracy, context, and completeness — key dimensions for clinical reliability.

Overall results: who’s winning?

- Best performer: OpenAI’s o3 model (~60% score)

- Others like Gemini 2.5, Grok 3, and GPT-4.1 perform competitively

- GPT-3.5 Turbo scores just ~16%, showing big gaps in safety-critical tasks

- GPT-4.1 mini and GPT-4.1 offer good performance-cost ratio

Keep in mind that even top models still fail more than two-thirds of the time.

How to use

You can use HealthBench to evaluate LLMs on their performance in clinical reasoning and communication across a range of health scenarios. Here’s a step-by-step overview:

- Access HealthBench on OpenAI’s GitHub (via the simple-evals GitHub repository)

- Run your model’s responses through the benchmark

- Use GPT-4.1 or a custom grader to score

- Analyze strengths/weaknesses by theme or axis

For more information, check out the simple-evals GitHub repository.

Conclusion

HealthBench is a new benchmark from OpenAI designed to evaluate how well LLMs, like GPT-4, perform in real-world healthcare scenarios. It evaluates model responses across multiple axes and themes, helping clinicians understand what today’s models can and can’t do.

References

- Paper on arXiv: “HealthBench: Evaluating Large Language Models Towards Improved Human Health”

- simple-evals GitHub repository

Recommended books

- LLMs and Generative AI for Healthcare: The Next Frontier (1st Edition) by Kerrie Holley and Manish Mathur. Explores how AI and LLMs are transforming healthcare. It covers challenges, real-world applications, and their impact on clinical decision-making, patient care, and drug discovery. Ideal for healthcare professionals, researchers, and AI experts.

- Natural Language Processing in Biomedicine: A Practical Guide (Cognitive Informatics in Biomedicine and Healthcare) (2024 Edition) by Hua Xu and Dina Demner Fushman. A practical guide to NLP in biomedicine, ideal for students, researchers, and professionals in AI and healthcare. It covers fundamentals, machine learning techniques, core NLP tasks, and real-world applications in clinical decision support, genomics, and medical research. Readers will also find key resources, datasets, and tools for NLP development.

The above books use affiliate links. If you buy through them, we may earn a commission at no extra cost to you. Thank you for supporting the site!