On January 9, 2023, the PyTorch team announced the public release of Holistic Trace Analysis (HTA), an open-source performance analysis and visualization Python library for PyTorch users.

The new tool identifies performance bottlenecks in distributed training workloads by analyzing traces collected through the PyTorch Profiler, also known as Kineto.

The resource problem

Machine learning researchers and systems engineers frequently struggle to scale up their models computationally because they are unaware of the performance limitations in their workloads. The resources requested for a job (e.g. GPUs, memory) are often misaligned with the resources actually needed.

Understanding resource usage and bottlenecks for distributed training workloads is crucial for getting the greatest performance out of the hardware stack. With HTA, developers can perform detailed analysis of model performance and identify bottlenecks to optimize computation, memory usage, and inference speed.

Main features of HTA

HTA provides the following features:

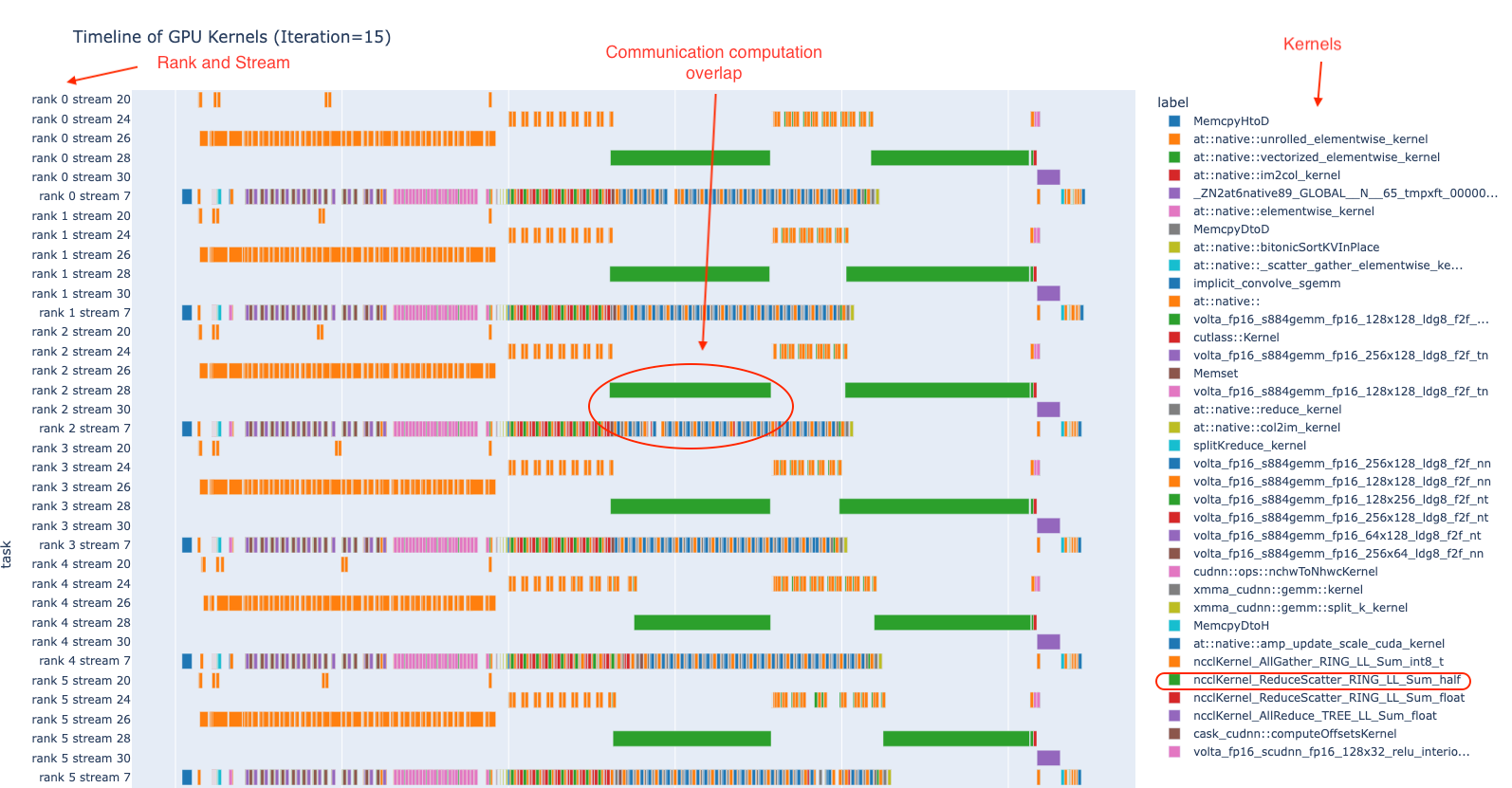

- Breakdown by Dimensions: Temporal Breakdown, GPU Idle Time Breakdown, Kernel Breakdown, Communication Computation Overlap.

- Statistical Analysis: Kernel Duration Distribution, CUDA Kernel Launch Statistics, Augmented Counters (Memory bandwidth, Queue length).

- Patterns: Find the CUDA kernels most frequently launched by any given PyTorch or user-defined operator.

- Trace Comparison: A tool to identify and visualize the differences between traces.
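As a rough illustration of how the "Breakdown by Dimensions" features might be called from Python, here is a minimal sketch. It assumes HTA is installed (the package is published as `HolisticTraceAnalysis`) and that the method names and the `visualize` flag match the installed version; they are taken from the project's README as best understood and should be checked against its documentation. The helper name `run_breakdowns` and the trace directory path are hypothetical.

```python
def run_breakdowns(trace_dir: str) -> dict:
    """Run HTA's four breakdown analyses on a folder of profiler traces.

    Sketch only: method names and the `visualize` keyword are assumptions
    based on the HTA README and may differ between versions.
    """
    # Imported lazily so the function can be defined without HTA installed.
    from hta.trace_analysis import TraceAnalysis

    # trace_dir should point at a folder of traces collected with the
    # PyTorch Profiler.
    analyzer = TraceAnalysis(trace_dir=trace_dir)

    # visualize=False asks HTA to return the results without drawing plots.
    return {
        "temporal": analyzer.get_temporal_breakdown(visualize=False),
        "idle_time": analyzer.get_idle_time_breakdown(visualize=False),
        "kernel": analyzer.get_gpu_kernel_breakdown(visualize=False),
        "comm_comp_overlap": analyzer.get_comm_comp_overlap(visualize=False),
    }


# Example call (hypothetical path, replace with a real trace folder):
# results = run_breakdowns("/path/to/trace_dir")
# print(results["temporal"])
```

Each entry in the returned dictionary holds whatever the corresponding HTA call returns, so the exact shape of the results depends on the library version in use.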

Learn more:

- Story source: “PyTorch Trace Analysis for the Masses” (on PyTorch Blog)

- Source code and more details about the HTA library are available in the project's GitHub repository